Sometimes, I think that we here at HubSpot are just a bunch of mad scientists.

We love to run experiments. We love to throw bold ideas at the wall to see if they stick, tinker with different factors, and see how the results can be incorporated into what we do every day. To us, it’s a very hot topic: we write about it whenever we can, and we try to lift the curtain on what we’re cooking up behind the scenes on our own marketing team.

But we’re going to let you in on yet another secret: Experiments are not designed to improve metrics.

Instead, experiments are designed to answer questions. And in this post, we'll explain some fundamentals of what experiments are, why we conduct them, and how answering questions can lead to improved metrics.

What Is an Experiment?

In a forward-thinking marketing environment, it's easy to forget why we run experiments in the first place, and what they fundamentally are. That's why we like to refer back to design of experiments, a discipline defined as "a systematic method to determine the relationship between factors affecting a process and the output of that process." Like experimentation itself, this method is used to discover cause-and-effect relationships.

To us, that informs a lot of the decision-making process behind experiments, especially the decision of whether to run a given experiment at all. In other words, what are we trying to learn, and why?

Experiments Are Quantitative User Research

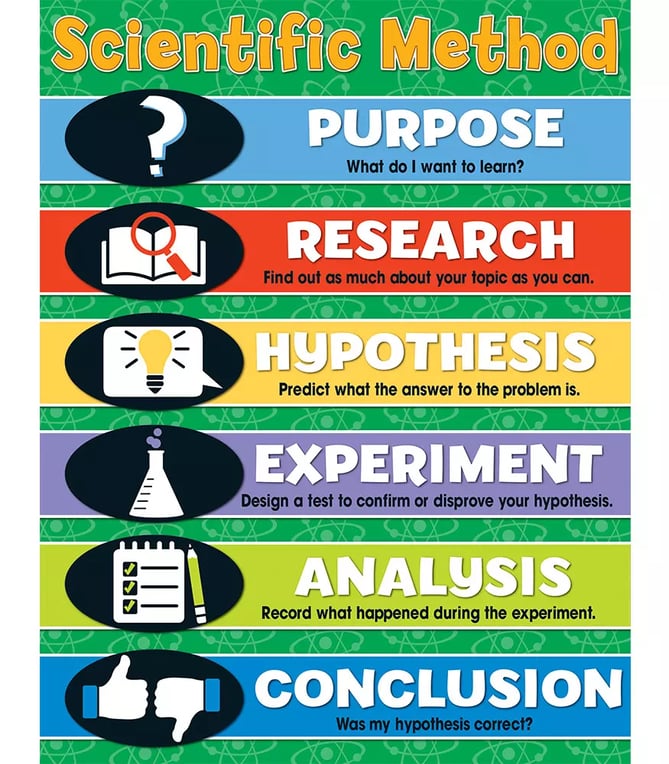

From what I’ve seen around the web, there seems to be a bit of a misconception around experimentation -- so please, allow me to set the record straight. As marketers, we do not run experiments to improve metrics. That type of thinking actually demonstrates a fundamental misunderstanding of what experiments are, and how the scientific method works.

Source: Carson-Dellosa Publishing

Source: Carson-Dellosa Publishing

Instead, marketers should run experiments to gather behavioral data from users, to help answer questions about who these users are and how they interact with your website. Before running a given experiment, you may have held some misinformed assumptions about your users. The answers an experiment provides challenge those assumptions and give better context on how people are using your online presence.

That’s one thing that makes experimentation such a learning-centric process: It forces marketers to acknowledge that we might not know as much as we’d like to think we do about how our tools are being used.

But if it’s your job to improve metrics, fear not -- while the purpose of experimentation isn’t necessarily to accomplish that goal, it still has the potential to do so. The key to unlocking improved metrics is often the knowledge gained through research. To shed light on this, let’s take a closer look at assumptions.

Addressing Assumptions

In reality, many marketers build an online presence on what I call a "pyramid of assumptions." That often happens when we don’t thoroughly answer the following questions before we build:

- Do we know who is finding a particular page?

- Do we know why they are visiting a particular page?

- Do we know what they were doing before visiting a particular page?

When you think about it, it’s kind of a loony concept to build online assets without these answers. But hey, you’ve got things to do. There are about a hundred more pages you need to build after this one. Oh, and there’s that redesign you need to get to. In other words, we understand why marketers take these shortcuts and make these assumptions under the pressure of a deadline. But there are consequences.

If those initial, core assumptions are wrong, conversions might be left on the table -- and that’s where experimentation serves as a potential opportunity to improve metrics. (See? We wouldn’t leave you hanging.) The data you collect from experiments helps you answer questions -- and those answers give you context around the customer journey. This context helps you make more educated decisions on what to build, and why. In turn, these educated decisions help to improve metrics.

Experimental Design: A Hypothetical Framework

The Experiment Scenario

Let’s say you’ve been hired as the marketing manager for the fictional Ruff N’ Tumble Boots Co., a.k.a. RNT Boots. Now, let’s say this brand has a product detail page for its best-selling boot, which explains in depth why this particular boot is perfect for the most intense hikes. The page’s conversion rate is “okay” at best, with about 5% of visitors purchasing the boot. Overall, the page is well designed.

But did you catch the assumption this page makes? It was built on the presumption that “People are buying the boot for hiking.” Core assumptions like this one must always be validated on high-value pages.

The hypothesis: By testing different value propositions on the product detail page, we’ll be able to observe any difference in purchase rate between them, then use what we learn to build better product pages with higher conversion rates.

The objective: In the scientific method, your objective should be to answer a question. Here, our objective is to learn the optimal way to position the boot product in our online store. Improving metrics is a potential downstream effect from gaining this learning.

Success indicators: If this experiment shows a difference in purchase rate of ±3 percentage points or more between value propositions, we will consider it successful. A result of this size will signal whether or not people purchase this boot for hiking.
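To make the success indicator concrete, here’s a minimal Python sketch of how one variant could be checked against the control. It assumes you have visitor and purchase counts for each group, and it pairs the ±3-point threshold with a standard two-proportion z-test, so a gap caused by random noise isn’t mistaken for a real difference. The 0.05 significance level and the function name `compare_to_control` are our own assumptions, not part of any particular testing tool.

```python
import math


def compare_to_control(control_buys, control_visits, variant_buys, variant_visits,
                       min_diff_pp=3.0, alpha=0.05):
    """Compare one variant's purchase rate with the control's.

    Uses a pooled two-proportion z-test (normal approximation). The 3-point
    threshold mirrors the success indicator; alpha = 0.05 is an assumed
    significance level.
    """
    p_control = control_buys / control_visits
    p_variant = variant_buys / variant_visits
    diff_pp = (p_variant - p_control) * 100  # difference in percentage points

    # Pooled rate under the null hypothesis that both groups convert equally.
    pooled = (control_buys + variant_buys) / (control_visits + variant_visits)
    se = math.sqrt(pooled * (1 - pooled) * (1 / control_visits + 1 / variant_visits))

    if se == 0:  # both groups at 0% or 100%: no variation to test
        p_value = 1.0
    else:
        z = (p_variant - p_control) / se
        p_value = math.erfc(abs(z) / math.sqrt(2))  # two-sided p-value

    return {
        "diff_pp": diff_pp,
        "p_value": p_value,
        # Successful only if the gap is big enough AND unlikely to be noise.
        "meets_indicator": abs(diff_pp) >= min_diff_pp and p_value < alpha,
    }


# Hypothetical counts: control converts at 5%, one variant at 9%.
result = compare_to_control(100, 2000, 180, 2000)
print(result)
```

Run it once for each variant against the control. Requiring both conditions, a large enough gap and a low p-value, keeps a small sample from producing a “successful” result by chance.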

The Experimental Design

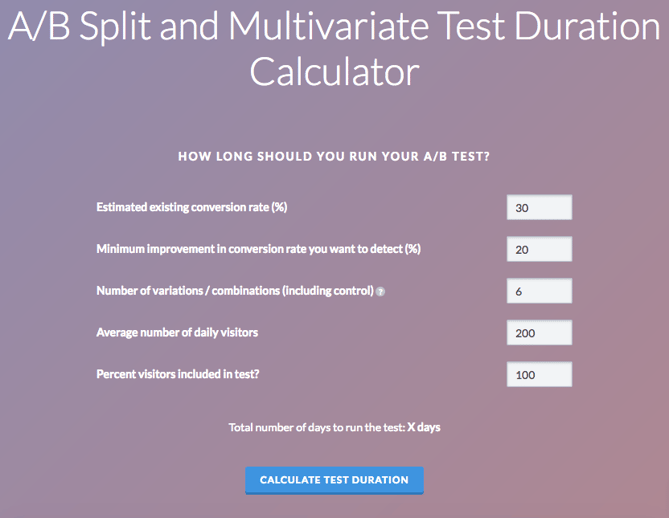

First, we need to determine whether our experiment can hit statistical significance within a reasonable amount of time -- one week or less. Here’s a calculator that can help with this step.

Source: VWO

Source: VWO
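If you’d rather see the math behind a duration calculator, here’s a rough Python sketch using the standard sample-size formula for comparing two proportions. It assumes a 5% baseline purchase rate, a 3-point minimum difference (5% to 8%), a 0.05 significance level, 80% power, traffic split evenly across the control and three variants, and a hypothetical 1,000 product-page visitors per day. A commercial calculator may use a different method, so treat the output as a ballpark estimate.

```python
import math
from statistics import NormalDist


def sample_size_per_group(baseline, min_diff, alpha=0.05, power=0.8):
    """Visitors needed in each group to detect an absolute change of
    `min_diff` from `baseline` with a two-sided test (normal approximation).

    Sizes the test for an increase; detecting an equal drop (e.g. 5% -> 2%)
    needs fewer visitors, so this is the more demanding case.
    """
    target = baseline + min_diff
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_power = NormalDist().inv_cdf(power)
    variance = baseline * (1 - baseline) + target * (1 - target)
    n = (z_alpha + z_power) ** 2 * variance / min_diff ** 2
    return math.ceil(n)


def days_to_finish(daily_visitors, groups, baseline, min_diff, **kwargs):
    """Days of traffic needed when visitors are split evenly across groups."""
    total_needed = sample_size_per_group(baseline, min_diff, **kwargs) * groups
    return math.ceil(total_needed / daily_visitors)


# RNT Boots: 5% baseline, 3-point minimum difference, control + 3 variants.
# The 1,000 visitors/day figure is hypothetical.
n = sample_size_per_group(0.05, 0.03)
days = days_to_finish(1000, 4, 0.05, 0.03)
print(n, days, days <= 7)
```

Comparing three variants against one control raises the chance of a false positive. One common, conservative adjustment is to divide alpha by the number of comparisons (here, 0.05 / 3), which increases the sample size needed per group and therefore the test duration.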

Then, we need to establish the control of our experiment: the untouched, pre-existing page, built on the assumption that the value proposition of product pages should be activity-focused. In this case, that activity is hiking.

Next, we’ll define three variants, or experiment groups, each built around a different product page value proposition:

- Variant A: Work or task; e.g., construction or landscaping tasks.

- Variant B: Seasonal; e.g., snow, mud, or hot weather.

- Variant C: Lifestyle; e.g., these boots fit into and are essential to a lifestyle that is athletic and outdoorsy, but also trendy.
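Each visitor should land in one group and stay there, or the results get muddied. Testing tools handle this for you, but here’s a minimal sketch of one common approach, deterministic hash-based bucketing, assuming each visitor has a stable ID (such as a first-party cookie value). The experiment key `rnt-boot-value-prop` and the group names are hypothetical.

```python
import hashlib
from collections import Counter

# Control plus the three value-proposition variants; names are hypothetical.
GROUPS = ["control_hiking", "variant_a_work", "variant_b_seasonal", "variant_c_lifestyle"]


def assign_group(visitor_id: str, experiment: str = "rnt-boot-value-prop") -> str:
    """Map a visitor to a group deterministically.

    Hashing the experiment key together with the visitor ID gives a stable,
    roughly even split: the same visitor always sees the same version, and
    a different experiment key reshuffles visitors independently.
    """
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    bucket = int(hashlib.sha256(key).hexdigest(), 16) % len(GROUPS)
    return GROUPS[bucket]


# Simulate 10,000 hypothetical visitor IDs to check the split is roughly even.
counts = Counter(assign_group(f"visitor-{i}") for i in range(10_000))
print(counts)
```

Because assignment depends only on the ID, you don’t need a lookup table to keep it consistent, and a returning visitor won’t flip between value propositions mid-journey.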

The Results

This experiment has three likely outcomes.

1) The control group will outperform the variant groups.

This isn’t necessarily bad: it means the experiment has validated your preexisting assumption about the product page’s value proposition. But it also means the value proposition isn’t what’s causing the less-than-stellar conversion rate. Document and share these results, and use them to figure out how you will determine the cause.

2) One or more variant groups will outperform the control group.

Wowza! You just learned that hiking may not be the main reason people buy this boot. In other words, the experiment has invalidated your preexisting assumption.

Again, document and share the results, and figure out how you’ll apply these findings. Should you fully swap out the value prop of the product detail page? Should you also rethink your target audience? These are the questions that should stem from an outcome like this one.

3) Nothing -- all groups perform about the same.

When nothing changes at all, I always like to take a step back and ask a few clarifying questions:

- Did different buyer segments show contrasting behavior on different variants?

- Are these segments evenly distributed?

- Could it be that none of these value propositions are resonating with our audience?

- Are they not resonating because users arrive at the product page already having decided to buy the boots?

In sum, measured against your hypothesis, this outcome is inconclusive. That means going back to the drawing board and redesigning your experiment (and its variants), so use the answers to the questions above to guide you.
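The first two clarifying questions can often be answered from data you already have. Here’s a minimal Python sketch, assuming each visit is logged as a (segment, group, purchased) record; the segment labels and sample records are hypothetical. It computes each segment’s purchase rate within each group, since an overall tie can hide segments moving in opposite directions, and each segment’s share of every group’s traffic, to check whether segments are evenly distributed.

```python
from collections import Counter


def segment_breakdown(records):
    """Return per-(segment, group) purchase rates and traffic shares.

    `records` is an iterable of (segment, group, purchased) tuples.
    """
    visits = Counter()
    purchases = Counter()
    for segment, group, purchased in records:
        visits[(segment, group)] += 1
        purchases[(segment, group)] += int(purchased)

    # Purchase rate of each segment within each group.
    rates = {key: purchases[key] / n for key, n in visits.items()}

    # Share of each group's visitors that belong to each segment.
    group_totals = Counter()
    for (_, group), n in visits.items():
        group_totals[group] += n
    shares = {(segment, group): n / group_totals[group]
              for (segment, group), n in visits.items()}
    return rates, shares


# Hypothetical log: hikers prefer the control, workers prefer Variant A.
log = [
    ("hiker", "control", True), ("hiker", "control", True),
    ("worker", "control", False), ("worker", "control", False),
    ("hiker", "variant_a", False), ("hiker", "variant_a", False),
    ("worker", "variant_a", True), ("worker", "variant_a", True),
]
rates, shares = segment_breakdown(log)
for key in sorted(rates):
    print(key, f"rate={rates[key]:.0%}", f"share={shares[key]:.0%}")
```

In this made-up log, both groups convert at 50% overall, yet each segment strongly prefers a different version: exactly the kind of pattern a flat top-line result can hide.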

If you’re not eager to jump back into another experiment -- after all, they can require resources like time and labor -- there are some alternatives to consider.

Was an experiment the only way to challenge these assumptions?

Aha -- here we are, back at the great do-we-need-to-run-this-experiment question. The honest answer: Probably not.

There are other tactics that could have been applied here. For example, something like a quick survey or seamless feedback tool could be added to the purchase flow of the boot.

However, that type of tactic is not without its flaws. Sometimes, for instance, there’s a difference between what people say on a survey, and the behavior they actually carry out.

As marketers, we sometimes refer to this concept as the “lizard brain”: someone might say they plan to use the boots for hiking when, in reality, they’re more likely to buy them if they can be convinced they’ll look good in them. That gap between what people say and what they do creates a degree of consumer bias.

So if you’re going to “build a story with surveys and interviews,” as my colleague, HubSpot Tech Lead Geoffrey Daigle, puts it, “validate that story with data.”

Your Experimentation Checklist

Now that you have all of the context on what experiments are, why we run them, and what we need to run them, let’s circle back and answer the original question: When should you run an experiment? Here are your criteria:

- A page has enough traffic volume to statistically prove experiment results within a reasonable amount of time.

- The page is built on top of unvalidated assumptions, and you’ve identified:

  - What the unvalidated assumptions are.

  - The stability of those assumptions.

- You are unable to validate assumptions by leveraging other methods.

And as for determining alternatives to experiments, here's a checklist of questions to ask:

- Do you have existing quantitative or qualitative data that you can leverage?

- Can you run a quick survey?

- Can you run a moderated user test?

- Can you run a user interview?

How do you approach the decision-making process and design of experiments? Let us know about your best experiments in the comments, and hey, we might even feature one on our blog.

from HubSpot Marketing Blog https://blog.hubspot.com/marketing/design-of-experiments
